🏆 LLM Go Tournament ○● (Baduk, Weiqi)

Can Foundation Models Play Go via a Text Protocol Alone, and What Can We Learn from It?

by Victor Zommers for PyData London

📢 Talk Inspiration:

♞ Kaggle Chess Text Input (FEN/PGN) Tournament

Best LLM score is below the median human Lichess rating (Glicko-2 ratings converted to Elo)

♞ Kaggle Tournament Table

🌱 Why Should We Care?

👉 AI Observability and Evals: the capacity of a general intelligence to handle a specialist task (reusing reasoning strategies across domains)

👉 Curse of Dimensionality in Specialist Intelligence (Overfitting): by simplifying the problem, we increase specificity at the expense of robustness and flexibility

| Horizon | Specialist AI | Generalist AI |
|---|---|---|
| Today | Chess Bot | Chess Bot |
| Tomorrow | Chess Bot | Managing Food Procurement for a Restaurant |
| Future | Chess Bot | Commanding a Frontline in a Skynet Invasion |

| Differences | Chess ♞ | Go ○● |
|---|---|---|
| Opening Theory Depth | 15-20 moves | 50+ moves |
| Branching Factor | ~35 legal moves | ~250-300 legal moves |
| Game Tree Complexity | 10^120 (Shannon number) | 10^360 (far more than the ~10^80 atoms in the observable universe) |
| Strategy | Tactical, positional | Strategic, spatial |
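The exponents in the Game Tree Complexity row follow from the rough rule of thumb b^d, where b is the average branching factor and d is a typical game length in plies. The minimal Python sketch below reproduces the orders of magnitude. It assumes textbook game lengths of about 80 plies for chess and 150 plies for Go; these lengths are not taken from this tournament. Shannon's 10^120 came from a slightly different estimate: about 10^3 options per pair of moves over 40 move pairs.

```python
import math


def log10_game_tree_size(branching_factor: float, plies: int) -> float:
    """Return log10(b**d), the crude b^d estimate of game-tree size.

    Working in log space avoids materialising astronomically large numbers.
    """
    return plies * math.log10(branching_factor)


# Branching factors from the table; game lengths (plies) are assumed textbook values.
chess = log10_game_tree_size(35, 80)     # ~35 legal moves, ~80 plies
go = log10_game_tree_size(250, 150)      # ~250 legal moves, ~150 plies

# Shannon's estimate: ~10^3 possibilities per move pair, 40 move pairs.
shannon = log10_game_tree_size(1_000, 40)

print(f"chess ~ 10^{chess:.1f}, Shannon ~ 10^{shannon:.0f}, go ~ 10^{go:.1f}")
# prints: chess ~ 10^123.5, Shannon ~ 10^120, go ~ 10^359.7
```

The b^d estimate for chess lands within a few orders of magnitude of Shannon's figure. For Go it lands on the ~10^360 quoted above.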

So I built an implementation that connects LLMs to GTP (Go Text Protocol), creating my own Go game engine

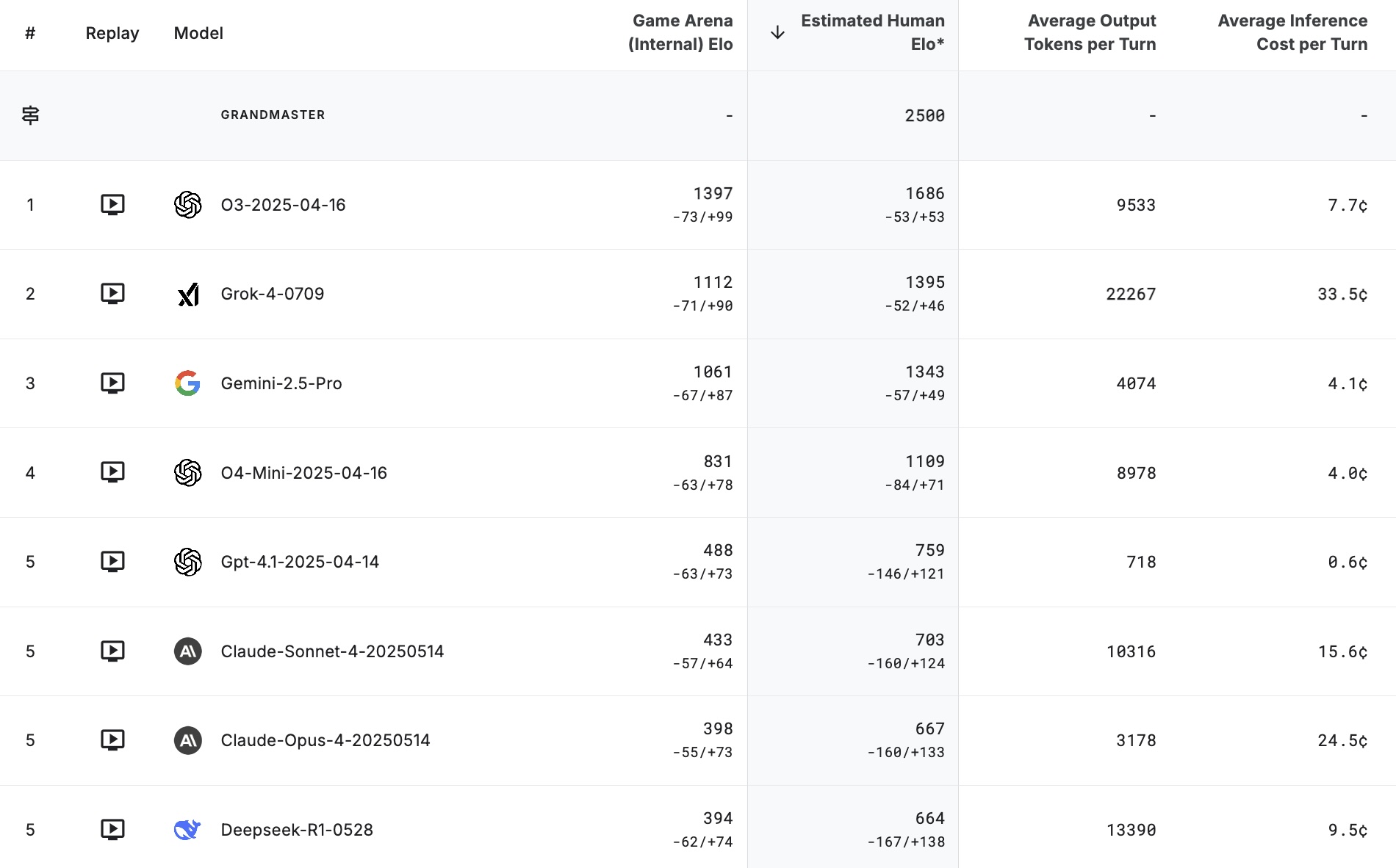
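GTP is a line-based text protocol. The controller sends commands such as `boardsize 19`, `play black D4` or `genmove white`, which may be prefixed by an optional numeric id. The engine answers with `=` (success) or `?` (failure), the echoed id, and the result or error message, then a blank line. The minimal Python sketch below shows the engine side. It assumes a hypothetical `choose_move` callback that wraps the LLM call. `handle_command`, `tokenize`, `main` and the engine name are illustrative only and are not the API of the go-bot-llm repository.

```python
import sys
from typing import Callable

# Hypothetical LLM hook (an assumption for this sketch): given the colour to
# move and the move history, return a GTP vertex such as "D4", "pass" or "resign".
MoveChooser = Callable[[str, list], str]

KNOWN_COMMANDS = ["protocol_version", "name", "version", "known_command",
                  "list_commands", "quit", "boardsize", "clear_board",
                  "komi", "play", "genmove"]


def tokenize(line: str) -> tuple[str, list]:
    """Strip comments, split into words and separate the optional numeric id."""
    words = line.split("#", 1)[0].split()
    if words and words[0].isdigit():
        return words[0], words[1:]
    return "", words


def handle_command(line: str, history: list, choose_move: MoveChooser) -> str:
    """Process one GTP command and return the full response text."""
    cmd_id, words = tokenize(line)
    if not words:
        return ""  # blank lines and comments get no response
    cmd, args = words[0].lower(), words[1:]

    def ok(result: str = "") -> str:
        return f"={cmd_id} {result}\n\n"  # success: '=' [id] result, blank line

    def err(message: str) -> str:
        return f"?{cmd_id} {message}\n\n"  # failure: '?' [id] message, blank line

    if cmd == "protocol_version":
        return ok("2")
    if cmd == "name":
        return ok("llm-go-sketch")
    if cmd == "version":
        return ok("0.1")
    if cmd == "known_command":
        return ok("true" if args and args[0] in KNOWN_COMMANDS else "false")
    if cmd == "list_commands":
        return ok("\n".join(KNOWN_COMMANDS))
    if cmd in ("quit", "boardsize", "komi"):
        return ok()  # a real engine would validate and store these settings
    if cmd == "clear_board":
        history.clear()
        return ok()
    if cmd == "play" and len(args) == 2:
        history.append((args[0].lower(), args[1].upper()))
        return ok()
    if cmd == "genmove" and len(args) == 1:
        colour = args[0].lower()
        move = choose_move(colour, list(history))  # the LLM picks the move here
        if move.lower() != "resign":
            history.append((colour, move.upper()))
        return ok(move)
    return err("unknown command")


def main(choose_move: MoveChooser) -> None:
    """Serve GTP over stdin/stdout until the controller sends 'quit'."""
    history: list = []
    for line in sys.stdin:
        sys.stdout.write(handle_command(line, history, choose_move))
        sys.stdout.flush()
        _, words = tokenize(line)
        if words and words[0].lower() == "quit":
            break
```

For example, `main(lambda colour, history: "pass")` gives an engine that always passes. Swapping the lambda for a function that prompts an LLM and parses its reply into a vertex is where the model plugs in. This sketch keeps no board state, so on its own it cannot reject illegal moves.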

⚛️ Our Contestants:

👉 Wally: 400 lines of code written in 1981 to run in 1 KB of RAM (30 kyu), using only 7 patterns/rules

👉 KataGo: flagship Go AI (>9 dan, or >3000 Elo)

👉 GPT-4o

👉 o4-mini

👉 Grok-4-fast-reasoning

👉 DeepSeek-R1-0528

⚖️ Rules (Similar to Kaggle)

👉 Up to 3 illegal move attempts per game, then automatic resignation

👉 LLMs can choose to resign at their own discretion

👉 Illegal moves in Go are defined as:

🚩 Invalid input (not a valid coordinate)

🚩 Point is already occupied

🚩 Suicide (a move that leaves its own stone or group with no liberties without capturing anything, e.g. in atari or seki fights)

🚩 Ko violation (recreates the previous board position)
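The four illegal-move categories above map onto a few board checks. The minimal Python sketch below shows one way to implement them, under these assumptions:

- Vertices are GTP-style: columns A to T without I, rows numbered from the bottom.
- Only the simple ko rule is enforced (no superko).
- Strikes are counted per game, and the game is forfeited once the count reaches 3. This is one reading of the rule above.

`Board`, `parse_vertex`, `referee_move` and the `ask` callback are hypothetical names, not the project's actual code.

```python
from typing import Callable, Optional

COLUMNS = "ABCDEFGHJKLMNOPQRST"  # GTP column letters skip "I"
Point = tuple[int, int]  # (row, col), 0-indexed from the bottom-left corner


class IllegalMove(Exception):
    """Raised with a short reason when a move breaks the rules."""


def parse_vertex(text: str, size: int = 19) -> Point:
    """Convert a GTP vertex such as 'D4' into (row, col)."""
    text = text.strip().upper()
    if len(text) < 2 or text[0] not in COLUMNS[:size] or not text[1:].isdigit():
        raise IllegalMove("invalid input")
    row = int(text[1:]) - 1
    if not 0 <= row < size:
        raise IllegalMove("invalid input")
    return row, COLUMNS.index(text[0])


class Board:
    def __init__(self, size: int = 19) -> None:
        self.size = size
        self.grid: dict[Point, str] = {}  # occupied points only: "b" or "w"
        self.previous: Optional[dict[Point, str]] = None  # position before last move

    def neighbours(self, p: Point):
        r, c = p
        for q in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= q[0] < self.size and 0 <= q[1] < self.size:
                yield q

    def group(self, grid: dict, start: Point) -> tuple[set, set]:
        """Flood-fill the chain containing `start`; return (stones, liberties)."""
        colour, stones, liberties, stack = grid[start], {start}, set(), [start]
        while stack:
            for q in self.neighbours(stack.pop()):
                if q not in grid:
                    liberties.add(q)
                elif grid[q] == colour and q not in stones:
                    stones.add(q)
                    stack.append(q)
        return stones, liberties

    def play(self, colour: str, vertex: str) -> None:
        """Apply a move, or raise IllegalMove and leave the board unchanged."""
        colour = colour[0].lower()  # accept "b", "black", "W", ...
        if vertex.strip().lower() == "pass":
            self.previous = self.grid  # a pass lifts the ko ban
            return
        p = parse_vertex(vertex, self.size)
        if p in self.grid:
            raise IllegalMove("point already occupied")
        opponent = "w" if colour == "b" else "b"
        new = dict(self.grid)
        new[p] = colour
        # Capture adjacent opponent chains that have no liberties left.
        for q in self.neighbours(p):
            if new.get(q) == opponent:
                stones, liberties = self.group(new, q)
                if not liberties:
                    for s in stones:
                        del new[s]
        if not self.group(new, p)[1]:
            raise IllegalMove("suicide")
        if new == self.previous:  # simple ko: recreates the prior position
            raise IllegalMove("ko violation")
        self.previous, self.grid = self.grid, new


def referee_move(board: Board, colour: str, ask: Callable[[str], str],
                 strikes: dict, max_illegal: int = 3) -> str:
    """Ask for moves until one is legal; auto-resign once strikes hit the cap.

    `ask` is a hypothetical LLM callback that gets feedback and returns a vertex;
    `strikes` holds each colour's illegal-move count for the whole game.
    """
    feedback = ""
    while strikes[colour] < max_illegal:
        move = ask(feedback)
        if move.strip().lower() == "resign":
            return "resign"
        try:
            board.play(colour, move)
            return move
        except IllegalMove as exc:
            strikes[colour] += 1
            feedback = f"{move} is illegal: {exc}"  # fed back to the model
    return "resign"
```

Building a fresh `new` position before checking suicide and ko means an illegal attempt never corrupts the board. That matters when the referee keeps re-prompting the same model.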

| Win / Loss | GPT-4o | o4-mini | grok-4 | DeepSeek-R1 | Wally | KataGo |
|---|---|---|---|---|---|---|
| GPT-4o | X | 0/4 (M: -19) | | 3/2 (M: -32.6) | 0/10 (M: -61) | 0/10 (M: -178) |
| o4-mini | 4/0 (M: +19) | X | 3/2 (M: -61.1) | 2/3 (M: -39.5) | | |
| grok-4 | | 2/3 (M: +61.1) | X | 4/1 (M: -46.3) | | |
| DeepSeek-R1 | 2/3 (M: +32.6) | 3/2 (M: +39.5) | 1/4 (M: +46.3) | X | | |
| Wally | 10/0 (M: +61) | | | | X | 0/5 (M: +234) |
| KataGo | 10/0 (M: +178) | | | | 5/0 (M: -234) | X |

Each cell shows the row player's wins/losses against the column player (M: score margin); empty cells are pairings that were not played.
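The matrix can also be read as per-model totals. The short Python sketch below sums each row's wins and losses. It first checks that every pairing appears mirrored in the opponent's row, which is how the flattened table above can be cross-checked. The `results` layout and the `totals` helper are assumptions made for this example. The numbers are the table's own, and unplayed pairings are simply omitted.

```python
# Row player's (wins, losses) against each opponent, copied from the table.
results = {
    "GPT-4o":      {"o4-mini": (0, 4), "DeepSeek-R1": (3, 2),
                    "Wally": (0, 10), "KataGo": (0, 10)},
    "o4-mini":     {"GPT-4o": (4, 0), "grok-4": (3, 2), "DeepSeek-R1": (2, 3)},
    "grok-4":      {"o4-mini": (2, 3), "DeepSeek-R1": (4, 1)},
    "DeepSeek-R1": {"GPT-4o": (2, 3), "o4-mini": (3, 2), "grok-4": (1, 4)},
    "Wally":       {"GPT-4o": (10, 0), "KataGo": (0, 5)},
    "KataGo":      {"GPT-4o": (10, 0), "Wally": (5, 0)},
}


def totals(table: dict) -> dict:
    """Return {player: (wins, losses, win_rate)} summed over all opponents."""
    out = {}
    for player, row in table.items():
        wins = sum(w for w, _ in row.values())
        losses = sum(lost for _, lost in row.values())
        out[player] = (wins, losses, wins / (wins + losses))
    return out


# Sanity check: each pairing must appear mirrored in the opponent's row.
for player, row in results.items():
    for opponent, (w, lost) in row.items():
        assert results[opponent][player] == (lost, w), (player, opponent)

for player, (w, lost, rate) in sorted(totals(results).items(),
                                      key=lambda kv: -kv[1][2]):
    print(f"{player:<12} {w:>2}W {lost:>2}L  {rate:.0%}")
```

Raw win rates ignore who each model played. Wally and KataGo only faced GPT-4o and each other, so their rows are not directly comparable with the LLM-vs-LLM rows.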

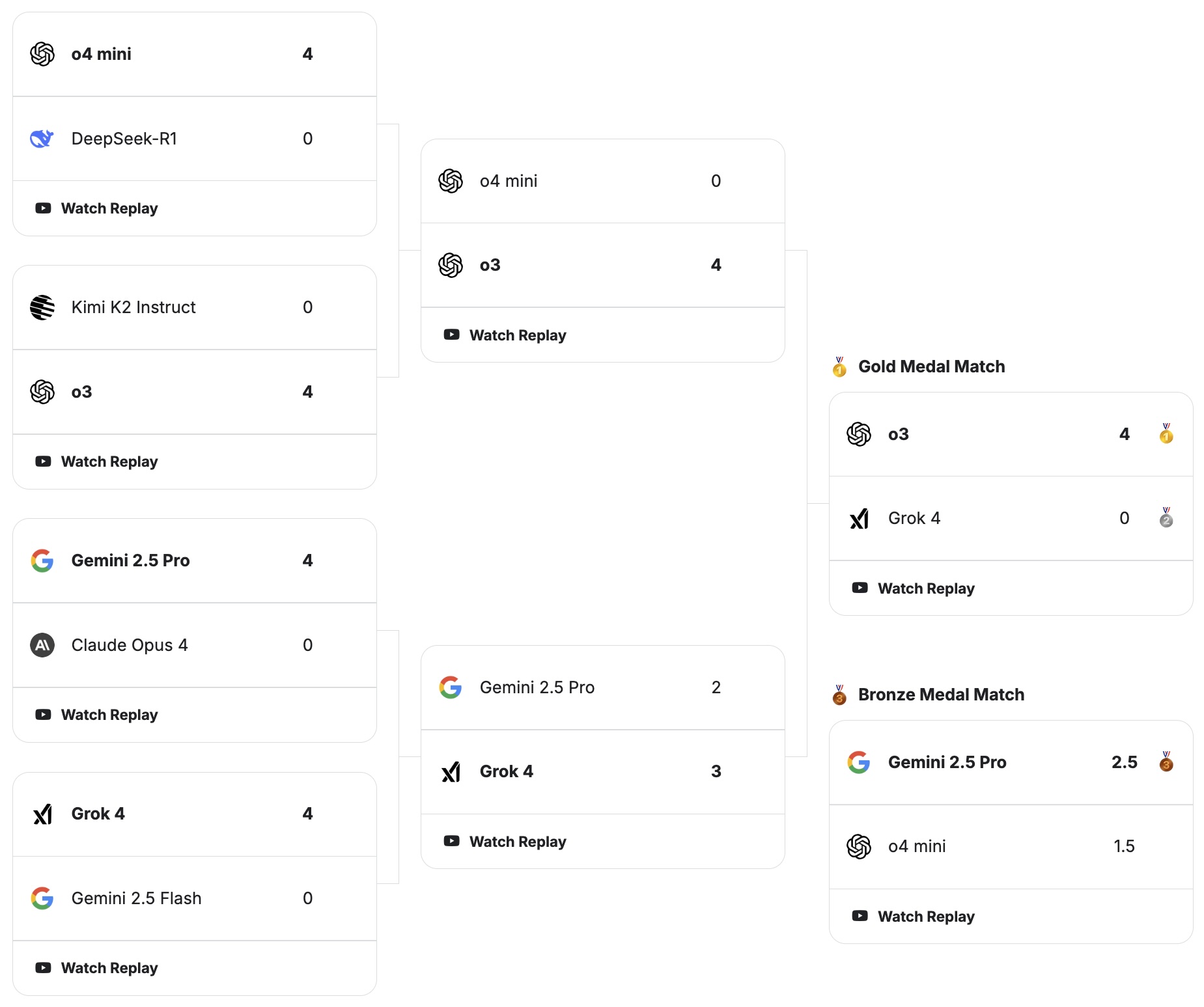

⚛️ grok-4-fast-reasoning (Black) vs. o4-mini (White)

⚛️ gpt-4o (Black) vs. wally (White)

TL;DR 🤏

- The strongest model is still below 500 Elo (bottom 5th percentile of players)

- GPT-4o is a sycophant

- Content Moderation Filter is ridiculous

- o4-mini and DeepSeek were the only models that CHOSE to resign

- Grok-4-fast was the strongest and the cheapest

- DeepSeek was the most knowledgeable

- o4-mini was the most reliable at avoiding illegal moves
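To put the "below 500 Elo" figure in perspective, the standard Elo expected-score formula is E = 1 / (1 + 10^((R_opponent - R_player) / 400)). E is the score a player expects per game, counting a win as 1, a draw as 0.5 and a loss as 0. The minimal Python sketch below plugs in the approximate ratings quoted in this talk: 500 for the best LLM and 3000 for KataGo, both used as round-number assumptions. Go rating scales (e.g. EGF, OGS, KataGo's self-play Elo) are not directly interchangeable, so treat the result as an order-of-magnitude illustration.

```python
def elo_expected_score(rating: float, opponent_rating: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((opponent_rating - rating) / 400))


# Round-number assumptions based on the figures quoted in the talk.
best_llm, katago = 500, 3000
e = elo_expected_score(best_llm, katago)
print(f"Expected score of a {best_llm} Elo player vs {katago} Elo: {e:.1e}")
```

A 2500-point gap gives an expected score of roughly one game in two million. This is consistent with the clean 10/0 sweep in the results table.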

🔗 Useful Resources:

🤖 Source Code of My Project

- LLM Go Engine/Protocol Implementation: github.com/viczommers/go-bot-llm

🌐 Learn Go Online

📍 Play Go In-Person

- London City Go Club (Monday 6PM @ Old Red Cow Pub EC1A)

- British Go Association (BGA)

🛠️ GitHub Repos

- Online-Go Server - Contribute Code! 👨🏻‍💻

- KataGo (Open-Source Go AI)

- github.com/maksimKorzh/wally - Original Wally